by Anna D. Havinga

“Digital Humanities” (DH) has become a buzzword in academia over the last few decades. DH centres have been set up, DH workshops and summer schools are held regularly all over the world, and the number of DH projects is increasing rapidly. But what is all the fuss about?

What is Digital Humanities?

Numerous articles discuss what DH is and is not. It is generally agreed that merely posting texts or pictures on the internet, or using digital tools for research, does not in itself qualify as DH. Few works, however, offer a concise definition of DH. Kirschenbaum quotes a definition from Wikipedia, which he describes as a working definition that “serves as well as any”. In my view, the Wikipedia definition of DH has improved since 2013, when Kirschenbaum’s article was published, and now captures the essence of DH more accurately:

[…] [A] distinctive feature of DH is its cultivation of a two-way relationship between the humanities and the digital: the field both employs technology in the pursuit of humanities research and subjects technology to humanistic questioning and interrogation, often simultaneously. Historically, the digital humanities developed out of humanities computing, and has become associated with other fields, such as humanistic computing, social computing, and media studies. In concrete terms, the digital humanities embraces a variety of topics, from curating online collections of primary sources (primarily textual) to the data mining of large cultural data sets to the development of maker labs. Digital humanities incorporates both digitized (remediated) and born-digital materials [i.e. materials that originate in digital form, ADH] and combines the methodologies from traditional humanities disciplines (such as history, philosophy, linguistics, literature, art, archaeology, music, and cultural studies) and social sciences, with tools provided by computing (such as Hypertext, Hypermedia, data visualisation, information retrieval, data mining, statistics, text mining, digital mapping), and digital publishing. (https://en.wikipedia.org/wiki/Digital_humanities)

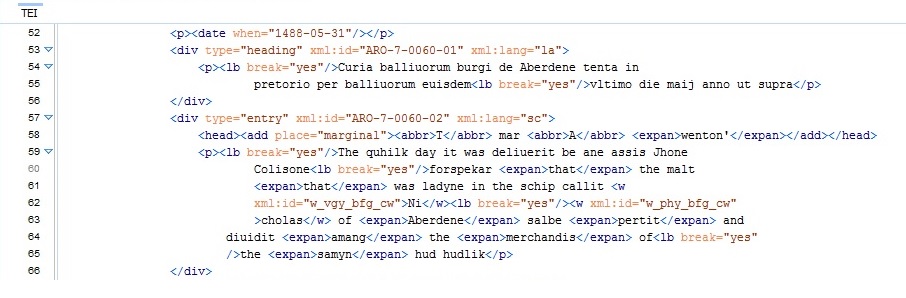

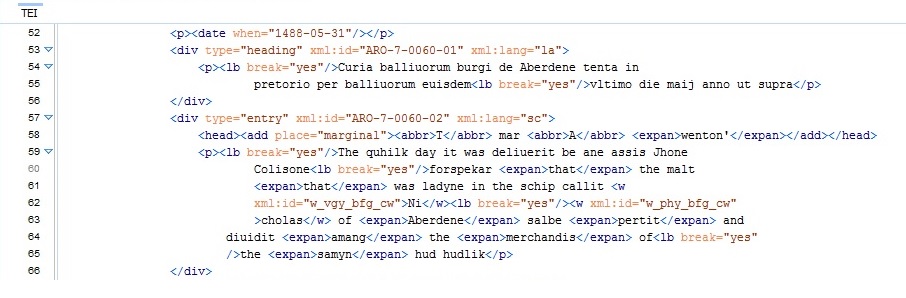

Our Law in Aberdeen Council Registers project is a prime example of a DH project: we create digital transcriptions of the Aberdeen Burgh Records (1397–1511) with the help of computing tools. This means that we type the original handwritten text into a software program in a format that computers can process. More specifically, we use the oXygen XML editor with the HisTEI add-on to create transcriptions that comply with the Text Encoding Initiative (TEI) guidelines (version P5). In this way, we produce a machine-readable and machine-searchable text. But what are the benefits of this? Why do we go to all this effort when images of the Aberdeen Burgh Records are already available online?
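To illustrate what “machine-searchable” can mean in practice, here is a minimal Python sketch (not the project’s own tooling, which is oXygen with HisTEI) that searches a TEI-encoded transcription for a word and reports the page(s) on which it occurs. It assumes that page beginnings are marked with TEI’s `<pb n="..."/>` milestone element; the function name `pages_containing` and the sample fragment, including its text, are invented for illustration.

```python
import re
import xml.etree.ElementTree as ET
from typing import Optional

TEI_NS = "http://www.tei-c.org/ns/1.0"  # the standard TEI P5 namespace
PB = f"{{{TEI_NS}}}pb"  # ElementTree's "{namespace}localname" form of <pb/>

# Invented placeholder text for illustration only; not taken from the registers.
SAMPLE_TEI = f"""<TEI xmlns="{TEI_NS}">
  <text>
    <body>
      <pb n="1"/>
      <p>curia tenta in pretorio de aberdene</p>
      <pb n="2"/>
      <p>the said day the aldirman and consale</p>
      <p>curia capitalis</p>
    </body>
  </text>
</TEI>"""


def pages_containing(tei_xml: str, word: str) -> list[str]:
    """Return the page labels (the n attribute of <pb/>) on which `word` occurs.

    Text is attributed to the most recent <pb/> milestone met while walking
    the tree in document order; matching is whole-word and case-insensitive.
    """
    root = ET.fromstring(tei_xml)
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    hits: list[str] = []
    current_page = ""  # stays empty until the first <pb/> is seen

    def record(text: Optional[str]) -> None:
        # Note each page only once, in the order pages are first matched.
        if text and pattern.search(text) and current_page not in hits:
            hits.append(current_page)

    def visit(elem: ET.Element) -> None:
        nonlocal current_page
        if elem.tag == PB:
            current_page = elem.get("n", "")
        record(elem.text)
        for child in elem:
            visit(child)
            # A child's tail is the text after its end tag; it belongs to the
            # page that is current once the child has been processed.
            record(child.tail)

    visit(root)
    return hits


print(pages_containing(SAMPLE_TEI, "curia"))  # ['1', '2']
```

Treating `<pb/>` as a milestone rather than a container reflects how TEI marks page boundaries: pages do not nest neatly inside paragraphs, so the sketch simply remembers the last page break it has passed.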

What are the benefits of a digital, transcribed version of a text?

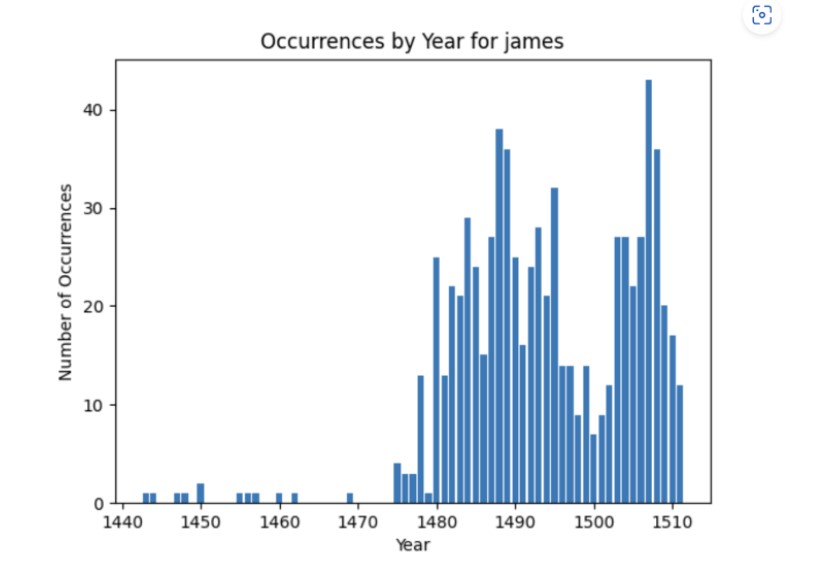

Besides being much easier to read than the original handwriting, a digital transcription allows information to be added to the text. With the help of so-called ‘tags’, a text can be enriched with all kinds of structural annotations and metadata; tagging here means adding XML annotations to the text. For example, the passages in the Aberdeen Burgh Registers, which are written mainly in Latin or Middle Scots, can be marked up by language using the ‘xml:lang’ attribute. A researcher interested in the use of Middle Scots in these registers could then easily find all Middle Scots sections in the corpus with a text analysis tool such as AntConc or Sketch Engine, without having to plough through the sections written in Latin. More generally, enriching the text with tags means that a researcher does not have to read through all of the more than 5,000 pages of the Aberdeen Council Registers that we will transcribe in order to find what s/he is looking for. A machine-readable and machine-searchable text not only saves time when researching a particular topic but is also more flexible than a printed text, as further tags can be added and unwanted tags can be hidden. Furthermore, a digital text allows us to ask new kinds of questions of a text corpus. These possible questions, along with a variety of other issues, have to be considered before embarking on a DH project.

Transcription of volume 7 of the Aberdeen Council Registers (p. 60), annotated with XML tags
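The language markup described above can be sketched in a few lines of Python. This is a minimal illustration, not the project’s workflow: it assumes passages carry `xml:lang` directly on their elements and uses the ISO 639 codes `la` (Latin) and `sco` (Scots), which may differ from the values the project actually uses. The function name `passages_in_language` and the sample text are invented.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"
# ElementTree reports xml:lang under the reserved XML namespace URI.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

# Invented sample; "la" and "sco" are assumed ISO 639 codes, not project values.
SAMPLE_TEI = f"""<TEI xmlns="{TEI_NS}">
  <text>
    <body>
      <div xml:lang="la"><p>curia tenta in pretorio</p></div>
      <div xml:lang="sco"><p>the said day the consale ordanit</p></div>
      <div xml:lang="la"><p>curia capitalis</p></div>
    </body>
  </text>
</TEI>"""


def passages_in_language(tei_xml: str, lang: str) -> list[str]:
    """Return the text of every element that carries xml:lang == lang.

    Only elements with the attribute set directly are matched; descendants
    that merely inherit the language are included in their ancestor's text.
    """
    root = ET.fromstring(tei_xml)
    return [
        " ".join("".join(elem.itertext()).split())  # flatten, normalise spaces
        for elem in root.iter()
        if elem.get(XML_LANG) == lang
    ]


print(passages_in_language(SAMPLE_TEI, "sco"))
# ['the said day the consale ordanit']
```

A filter like this could, for instance, export only the Middle Scots passages to plain text before they are loaded into a concordancer, so that Latin sections never enter the search at all.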

What has to be considered when setting up a DH project?

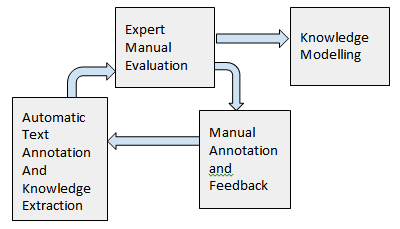

Several major questions have to be considered before starting a DH project of the kind we are carrying out: What do you want to get out of the material you are working on? Who else will be using it? In what way will it be used? Which research questions could be asked? Knowing who the possible users of the born-digital material are is essential for deciding which information should be marked up in the text corpus. This is, of course, also a matter of time (and money), since adding information to the original text in the form of tags takes time. The balance between time and enrichment has to be struck for each individual DH project. In our project, we decided to annotate in stages: basic annotations (e.g. expansions and languages) first, further tags (e.g. names and places) later. Users will also be able to add further annotations specific to their own research projects. Beyond these considerations, choices about software and hardware, tools, platforms, web development, infrastructure, server environment, interface design etc. have to be made before embarking on the project. Anything left undecided at the outset may require considerable extra effort at a later stage.
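To make the idea of a first annotation stage concrete, the sketch below shows one common way TEI P5 records an expansion: a `<choice>` element holding the abbreviated form in `<abbr>` and the expanded form in `<expan>`. Whether the project encodes expansions exactly like this is an assumption, and the function `render` and the abbreviation in the sample are invented. The point is that a single encoding can yield either a diplomatic or an expanded reading, simply by hiding one of the alternatives.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"
T = f"{{{TEI_NS}}}"  # namespace prefix in ElementTree's "{uri}name" form

# Invented sample line; the abbreviation "sd" for "said" is illustrative only.
SAMPLE_P = (
    f'<p xmlns="{TEI_NS}">the <choice><abbr>sd</abbr>'
    "<expan>said</expan></choice> day</p>"
)


def render(elem: ET.Element, reading: str) -> str:
    """Flatten `elem` to plain text, resolving each <choice> for one reading.

    reading="diplomatic" keeps <abbr>; reading="expanded" keeps <expan>.
    Any other element (e.g. a later <persName> layer) is passed through.
    """
    if reading not in ("diplomatic", "expanded"):
        raise ValueError(f"unknown reading: {reading!r}")
    keep = T + ("abbr" if reading == "diplomatic" else "expan")
    parts = [elem.text or ""]
    for child in elem:
        if child.tag == T + "choice":
            # Keep only the alternative that belongs to the requested reading.
            parts.extend(render(alt, reading) for alt in child if alt.tag == keep)
        else:
            parts.append(render(child, reading))
        parts.append(child.tail or "")
    return "".join(parts)


line = ET.fromstring(SAMPLE_P)
print(render(line, "diplomatic"))  # the sd day
print(render(line, "expanded"))    # the said day
```

Because unknown elements are passed through unchanged, tags added in a later stage, such as personal or place names, would not break either reading; this is one way staged annotation can stay cheap to extend.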

It is certainly worth going to all this effort. To us it is clear why DH has become such a big thing: it eases research, extends the toolkit of traditional scholarship, and opens up material to a wider audience. With tags we can enrich texts with additional information, which can in turn change the nature of humanities inquiry. DH projects are by nature about networking and collaboration between disciplines, which is certainly the way forward in the humanities.